Sifting through the AI fear porn

Civil Liberties and Emotional Manipulation are the two biggest dangers

TL;DR - Most of what is in the news on AI right now is fear porn. How can you tell? It hovers over the topic without focusing on what dangers exist and fails to offer any ways to think through the dangers and manage the risks. It’s like that police helicopter circling your neighborhood, but no one is saying anything about why. The two biggest dangers are law enforcement using AI alone to claim probable cause, and emotional experimentation being done on us without our knowledge or consent.

Data, Information, and Knowledge

Believe it or not, Artificial Intelligence (AI) can be simplified. But first we must discuss “Knowledge Management” (KM). Imagine a spreadsheet. Each “cell” contains “data.” Each piece of data - by itself - does not have any meaning beyond the symbols (i.e. letters, numbers, punctuation, etc.). Once these data are arranged in columns and rows, it is this “context” that brings meaning to the data.

Hence the textbook definition of the word “information” - “data in context with other data.” Consider a spreadsheet where the second and following rows have a first and last name in the first and second column, respectively (columns A and B). In the first row there are “labels” - “First Name” and “Last Name” - in those two columns. The cells in rows two and following contain data. The labels in the first row - column headers - are “meta-data,” which is simply “data about data.”

Now add in Column C: “DOB” in row 1. Add calendar dates to the right of each first/last name. Apart from this contextual arrangement of rows, columns, and meta-data these calendar dates are nothing more than that - calendar dates. But in this contextual arrangement it is obvious they are birth dates. Let’s imagine the first and last names in row 2 are mine: John Horst. Let’s add in Column C, Row 2 (C2) the following date: 8/1/1967. (I was born in 1967, just not on August 1.)

Information + Math = Knowledge

Or maybe let me state the header here as a function: Knowledge = Math( Information );

This is critical to understanding AI. In KM, data is brought into context with other data to create information. Information then becomes knowledge by way of math. Let’s add a fourth column (Column D) with “Age” as the header (row 1). In row 2 we’ll do some math (using Excel functions): =DATEDIF(C2, TODAY(), "y"). "C2" is the cell that has the birth date. The TODAY() function returns the current date. DATEDIF simply shows the difference between the two. The “y” at the end says “show me the difference in complete years.”

Now we have knowledge. We went from data (the cells) to information (arranging the cells in rows and columns) to knowledge (doing math on the information). We know I am 55 years old. (It seems that is valuable to AARP and others who send me junk mail.)
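For readers who prefer code to spreadsheets, the same data-to-information-to-knowledge steps can be sketched in Python. This is only an illustration: the `age_in_years` helper and the fixed "today" date of June 1, 2023 are assumptions made here so the result is repeatable, and the birth date is the placeholder from the example above.

```python
from datetime import date

def age_in_years(dob: date, today: date) -> int:
    """Complete years between dob and today, like DATEDIF(dob, today, "y")."""
    years = today.year - dob.year
    # Subtract one if this year's birthday has not happened yet.
    if (today.month, today.day) < (dob.month, dob.day):
        years -= 1
    return years

# Information: data in context. The dictionary keys play the role of the
# column headers (the meta-data).
row = {"First Name": "John", "Last Name": "Horst", "DOB": date(1967, 8, 1)}

# Knowledge = Math(Information). The "today" value is an assumed, fixed date;
# a live sheet would use date.today() instead.
age = age_in_years(row["DOB"], date(2023, 6, 1))
print(age)  # 55
```

Swap the fixed date for `date.today()` to get the behavior of the TODAY() function in the spreadsheet.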

AI is just fancy statistics

Now I am going to make the AI crowd really mad at me. AI is simply the application of statistical methods (math) to information (a “neural network”), which is a particular contextual arrangement of data.

Up to now, by using the spreadsheet as the means by which data gains context I have been assuming what is called set theory. We don't need to get into those weeds other than to say there is a different theory by which we can arrange data - graph theory. We do need to explain a few things about graph theory, but it is more important to remember the basics of KM - data becomes information and math is then used to discover knowledge.

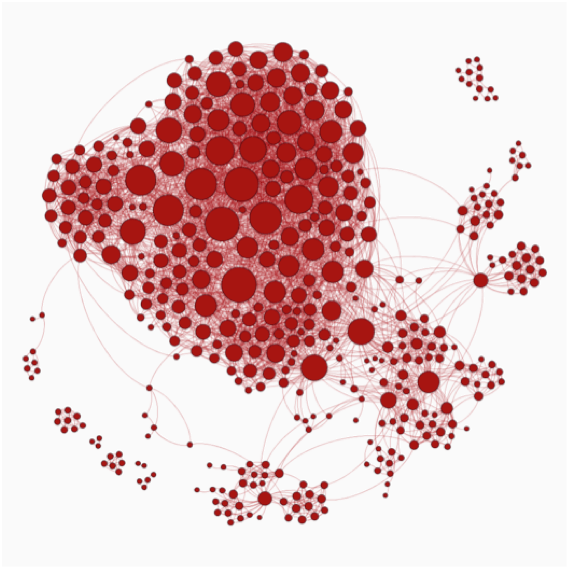

Graph data uses “nodes” (shown as small circles) to represent nouns - people, places, and things. “Edges” (shown as lines connecting the nodes) represent interactions between nodes as verbal phrases. “Labels” are attached to nodes and edges. This is often called a “neural network” because it follows the neuron (node) - synapse (edge) pattern of the human brain.
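A minimal sketch of the node, edge, and label idea in Python may help. Everything here is hypothetical: the names `alice`, `bob`, and `acme_news`, the edge labels, and the `neighbors` helper are invented for illustration and do not describe any real platform's data model.

```python
# Nodes are nouns. Each one carries a label saying what kind of thing it is.
nodes = {
    "alice": "Person",
    "bob": "Person",
    "acme_news": "Organization",
}

# Edges are verbal phrases connecting two nodes: (source, label, target).
edges = [
    ("alice", "FOLLOWS", "bob"),
    ("alice", "READS", "acme_news"),
    ("bob", "READS", "acme_news"),
]

def neighbors(node):
    """Return (edge label, target node) for every edge leaving `node`."""
    return [(label, dst) for src, label, dst in edges if src == node]

print(neighbors("alice"))  # [('FOLLOWS', 'bob'), ('READS', 'acme_news')]
```

Notice there are no rows or columns here. The context comes from which nodes are connected and how, rather than from a position in a table.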

If AI is fed a massive amount of this graph data, it can recognize patterns of correlation otherwise invisible. This is “unsupervised learning.” All it can do, though, is recognize patterns - it cannot interpret them. This is high school science. If you find a statistical correlation between things, you form a hypothesis, create a study group and control groups and do an experiment. Unsupervised learning can discover the correlations. But from there someone - a data scientist - has to form a hypothesis (perhaps concerning cause and effect), segment the data into a study group and control groups, and run one or more experiments.
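To make "recognize patterns but not interpret them" concrete, here is a deliberately simple stand-in: a plain Pearson correlation, not an actual unsupervised learning algorithm. The two lists are made-up numbers, and the variable names are assumptions chosen for illustration.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Made-up data: hours online per day and angry reactions posted per day.
hours_online = [1, 2, 3, 4, 5]
angry_reactions = [2, 4, 5, 4, 5]

r = pearson(hours_online, angry_reactions)
print(round(r, 2))  # 0.77
```

The math reports that the two columns move together. It says nothing about whether time online causes anger, anger causes time online, or something else drives both. That question still needs a hypothesis and an experiment.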

Another form of AI is “supervised learning.” This relies on humans to tag - or label - nodes in the graph. The biases of the humans doing the labeling will directly influence what AI supposedly “learns” about the data, and therefore any apparent correlations which then drive experiments.

Business Model of Big Tech

It is at this point we need to simplify the business model of Big Tech: the monetization of the discovery of knowledge about us. Common wisdom says if the tech product is free, then you are the product. Well, not quite. Their product is the discovery of knowledge about you. So here you have it: AI is an execution of KM principles using data arranged in accordance with graph theory to which statistics are then applied to discover (and then sell) knowledge.

So where is the danger? Why is that helicopter circling overhead again? Now we need to categorize the use of KM. I’ll choose two to start with and keep it simple: law enforcement and audience engagement (I’ll explain how audience engagement covers both media and politics).

By now you have probably heard about “deep fakes.” That’s the shiny object right now. This is called Generative AI, and it is quite impressive when you see a painting or cartoon drawn to a plain language prompt - or have AI write a paper for you. Will students try to cheat? (Hello??? Are they really asking that question?) Will politicians create fake videos to attack an opponent? (Again… Hello???) Or maybe the best one yet: Will people no longer trust what is reported to them on the news? (That one gets all caps HELLLLLOOOOO???)

Law enforcement gets lazy

There is very real danger here. But no, the robots are not going to take over the world. That’s plain fear porn. It works because you can make movies with cool special effects around that plot. Nope, the real danger is whether our civil liberties can be taken from us based purely on statistical inference.

The Supreme Court defines “reasonable suspicion” as “the sort of common-sense conclusion about human behavior upon which practical people... are entitled to rely.” This is a pretty low standard and simply means law enforcement “sees” something that gives rise to a suspicion that some criminal activity may be imminent.

Then there is “probable cause.” This is a higher standard that requires clearer justification to believe a crime has been committed, is in progress, or is about to be committed. This is required for law enforcement to get a warrant either for a search or an arrest.

While “Artificial Intelligence” sounds mystical and esoteric, it is nothing more than statistical inference. Granted, bringing data together into context using graph theory (i.e., creating a “neural network”) is quite a bit more complex and sophisticated than a spreadsheet. But once the information is available for analysis - as a set or a graph - that analysis does not amount to anything more than sophisticated statistics.

We must decide if “statistical inference,” by itself, is sufficient to create reasonable suspicion and/or probable cause. If we allow this, then we have ceded civil liberties to nameless, faceless people like me slinging code in a cubicle in some tall building somewhere.

Emotional experimentation

The other danger arises from a combination of our own use of social media platforms and Artificial Intelligence. Your use of social media is being transformed into an emotional dossier on you. And if you don’t believe this - or find it conspiratorial - watch “The Great Hack” on Netflix.

Your activity on social media, as well as browsing history, search terms, etc. are all discrete pieces of data brought together on what is called the “social graph.” Especially interesting is your use of emojis, and the use of language models to gauge emotions.

The technology and math are complex, but this really boils down to a science experiment a high school student can understand. Segment the social media population into a study group, and then into control groups, send them content, and then analyze how they interact with it. You can literally prove what kinds of content and topics will be most likely to make your study group angry or afraid (or both).
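The shape of such an experiment can be sketched in a few lines of Python. This is a toy under stated assumptions: the `split_groups` and `lift` helpers are hypothetical names, the user IDs are just the numbers 1 through 10, and the engagement counts are made up. Nothing here reflects how any particular platform actually runs its tests.

```python
import random

def split_groups(user_ids, seed=0):
    """Randomly assign users to a study group and a control group."""
    rng = random.Random(seed)  # fixed seed so the split is repeatable
    shuffled = list(user_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

def lift(study_scores, control_scores):
    """Difference in average engagement between the two groups."""
    study_avg = sum(study_scores) / len(study_scores)
    control_avg = sum(control_scores) / len(control_scores)
    return study_avg - control_avg

# Step 1: segment the population.
study, control = split_groups(range(1, 11))

# Step 2: the study group gets the test content, the control group does not.
# Step 3: compare reactions. These per-user counts are made up.
study_scores = [7, 9, 8, 6, 10]
control_scores = [4, 5, 3, 6, 2]

print(lift(study_scores, control_scores))  # 4.0
```

A positive lift means the study group reacted more than the control group. Repeat this across topics and tones and you get a ranked list of what provokes a given audience segment.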

Media and politics are now the same thing

In the news business it used to be “if it bleeds it leads.” Today, if it pisses you off, it makes it onto your news feed. None of the major news media outlets - including Fox News and MSNBC to use two more extreme examples - are “conservative” or “liberal” per se. Rather than red or blue, they are decidedly the color of money - green.

They have identified their audience segment not merely by age and income, but by political orientation. Fox went out and got older conservatives as their audience. Now they have to keep that audience engaged. They start by identifying their audience’s “other” and then make sure to feed their audience news about their “other” that will make them angry at and afraid of their “other.” Having used fear and anger to drive audience engagement, they simply hand off that audience’s attention to their advertisers.

Of course MSNBC does exactly the same thing. It just seems the outlets serving a left-leaning data segment need to gaslight their viewers with ever more outrageous claims (e.g. everything is racism and white supremacy now).

But where this really gets interesting is how political consultants have discovered that the same issues that keep a social media segment engaged in a media product are what gets a segment of voters to the polls: fear and anger. There is no difference any longer between media and political consulting. Do not be surprised when your party’s political candidates are stumping on the same issues that make the top of your social media feed. This is not a coincidence.

So the real questions for us when it comes to AI are these: Will people be searched simply based on an AI model prediction? Or worse yet, will they be arrested solely on such statistical inferences? And are we - as social media users and customers of Big Tech - essentially being experimented on? Are data science experiments creating control groups online without our knowledge, feeding us content designed to provoke emotional distress, and then analyzing the difference in reactions?

The value of this kind of knowledge to media companies and political consultants is just too high not to dig into these questions. (That means you Taibbi/Racket, Weiss/Free Press, Shellenberger/Public, Lowenthal/Network Affects, etc.)

I like this approach to discussing AI. It focuses on the real dangers instead of the advertised doom and gloom of robots surpassing human intelligence. The latter is really condescending to us humans and our cognition.